This is the seventh article in my series of posts on DevOps. If you have not read my previous posts, I recommend you do so before reading this one. They explain, for example, how Lean thinking has influenced the DevOps movement and what happens when you change the size of work packages in the flow of value delivery to the customer.

As soon as we start talking about the flow of value delivery, a new element comes into play in our system. Once we establish that software development fits a model based on value delivery and is really a flow management problem, other management techniques become relevant and can be applied to it.

In this case, we are talking about the Theory of Constraints (TOC).

This theory was developed by Eliyahu M. Goldratt in his book The Goal. I am not going to go into too much detail about its content, but I do recommend you read it. It is a novel, which makes it very easy to read, and it is a real eye-opener. This novel inspired The Phoenix Project by Gene Kim, Kevin Behr and George Spafford, a reference text in the DevOps world. In fact, The Phoenix Project is a “translation” of The Goal from the manufacturing world to the IT world. Anyone in IT who reads it will immediately identify with it and think it was written for them.

Below, I will briefly describe the Theory of Constraints, and throughout this post I will explain why it is so important to the DevOps world.

The Theory of Constraints

The theory itself is very simple. Broadly speaking, it states that in a value delivery system there will always be a constraint, and this constraint slows down the flow.

There are many “popular sayings” that implicitly support this theory. For example, “the chain is only as strong as the weakest link”, “a group is only as fast as its slowest member”, etc.
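The weakest-link idea can be expressed in a few lines. The sketch below assumes a purely serial value stream in which every item passes through every stage. The stage names and capacities are invented for illustration. Under that assumption, the flow as a whole can never deliver faster than its slowest stage.

```python
from typing import Dict, Tuple

def system_throughput(stages: Dict[str, float]) -> Tuple[str, float]:
    """Return the bottleneck stage and the throughput of the whole flow.

    Assumes a serial flow: every item passes through every stage, so the
    slowest stage caps the rate at which value reaches the customer.
    """
    bottleneck = min(stages, key=stages.get)
    return bottleneck, stages[bottleneck]

# Hypothetical capacities, in work items per day.
stages = {"analysis": 8.0, "development": 6.0, "testing": 5.0, "deployment": 2.0}
print(system_throughput(stages))  # ('deployment', 2.0)
```

Making development or testing faster would not change the result here. Only the deployment stage limits the flow.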

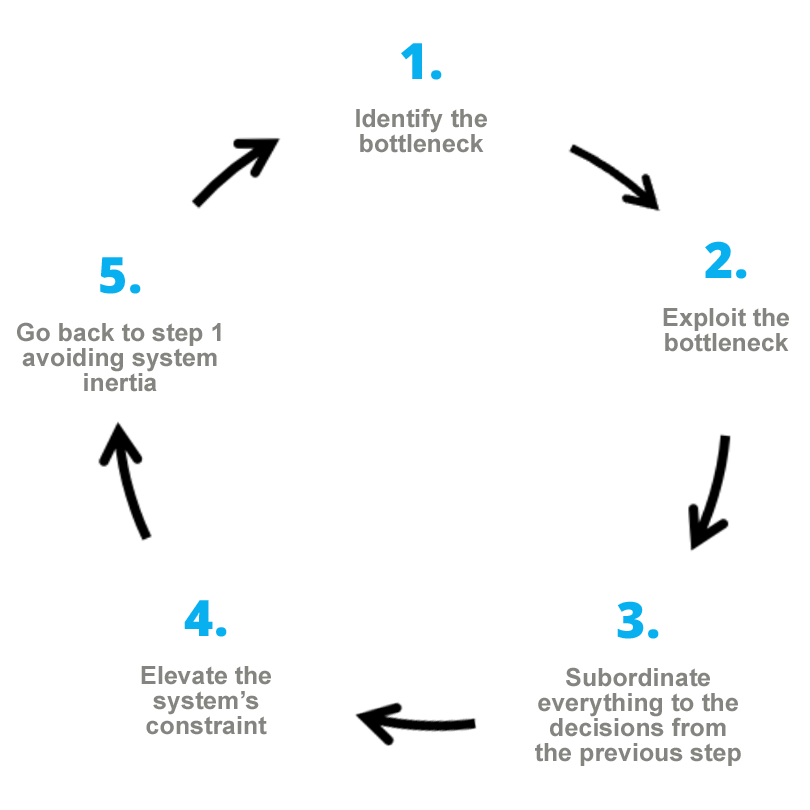

In practice, the theory deals with the bottlenecks within the system that limit our ability to deliver value, and with how to manage them. For this purpose, it sets out its Five Focusing Steps, which we will explain later:

- Identify the bottleneck.

- Exploit the bottleneck.

- Subordinate everything to the decisions from the previous step.

- Elevate the bottleneck.

- Go back to step 1, avoiding system inertia.

This procedure is very simple, but what really matters is that, applied systematically, it leads to continuous improvement, because we will always have to look for the current constraint and improve it. A culture of continuous improvement is one of the fundamental pillars of DevOps, so it is easy to understand the enthusiasm the theory generates among DevOps supporters.

“The important thing about the Theory of Constraints is that, applied in a systematic way, it leads to a process of continuous improvement.”

Practical application of the Theory of Constraints

Let’s now take a closer look at these five steps (always from my personal point of view):

1. Identify the bottleneck

The first step in the procedure is to identify the bottleneck. We should always be measuring the throughput and cycle time of our system and asking ourselves why throughput is not higher or why cycle time is not shorter. Simply asking these questions will help us think about where the bottleneck in our value delivery flow might be.
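Both metrics can be computed from the start and delivery dates of completed work items. The following is a minimal sketch under the assumption that each item records when work began and when it was delivered. The `WorkItem` type and the dates are hypothetical, and throughput is taken as items delivered per calendar day in a given window.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import List

@dataclass
class WorkItem:
    started: date   # when work on the item began
    finished: date  # when it was delivered to the customer

def throughput_per_day(items: List[WorkItem], start: date, end: date) -> float:
    """Items delivered per day within the inclusive window [start, end]."""
    days = (end - start).days + 1
    delivered = [item for item in items if start <= item.finished <= end]
    return len(delivered) / days

def average_cycle_time_days(items: List[WorkItem]) -> float:
    """Mean number of days from the start of work to delivery."""
    return mean((item.finished - item.started).days for item in items)

items = [
    WorkItem(date(2024, 3, 1), date(2024, 3, 4)),
    WorkItem(date(2024, 3, 2), date(2024, 3, 8)),
    WorkItem(date(2024, 3, 5), date(2024, 3, 10)),
]
print(throughput_per_day(items, date(2024, 3, 1), date(2024, 3, 10)))  # 0.3
print(average_cycle_time_days(items))
```

Tracking these two numbers over time is what makes it possible to ask whether a change actually improved the flow.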

As we saw in a previous post, using Value Stream Maps in DevOps is essential. At this step it is particularly important to understand our Value Stream Map clearly, because it will greatly help us identify where the bottleneck is. If we support the Value Stream Map with a Kanban system, the bottleneck becomes even easier to spot. In a manufacturing process the inventory of parts piles up in front of the bottleneck, and in the same way, in our Kanban system the user stories/tasks/requests pile up in the column associated with the bottleneck. For example, in a “traditional” software development system we will see them accumulate in the Deployment to Production column. Now we know where to start looking.
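Looking for the column where cards pile up is easy to automate. The sketch below assumes the board is available as a mapping from column name to the cards in it. The column names, card IDs and the choice to ignore backlog and done columns are illustrative assumptions, not features of any particular Kanban tool.

```python
from typing import Dict, List, Sequence

def likely_bottleneck(board: Dict[str, List[str]],
                      ignore: Sequence[str] = ("Backlog", "Done")) -> str:
    """Return the work-in-progress column holding the most cards.

    Backlog and Done columns are skipped: cards are expected to accumulate
    there, so a long queue in them says nothing about a bottleneck.
    """
    candidates = {column: cards for column, cards in board.items()
                  if column not in ignore}
    return max(candidates, key=lambda column: len(candidates[column]))

# Hypothetical snapshot of a board.
board = {
    "Backlog": ["US-9", "US-10", "US-11", "US-12", "US-13"],
    "In development": ["US-7"],
    "Testing": ["US-5", "US-6"],
    "Deployment to Production": ["US-1", "US-2", "US-3", "US-4"],
    "Done": ["US-0"],
}
print(likely_bottleneck(board))  # Deployment to Production
```

A single snapshot can mislead. Watching how queue lengths evolve over several days gives a more reliable signal.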

2. Exploit the bottleneck

I have to say that I do not particularly like this term because it does not convey clearly what it means.

It basically involves identifying and assessing the maximum capacity of the bottleneck, analysing why it is not operating at that capacity, and making decisions to change that. We are likely to be surprised to see that on many occasions we are not really working at (maximum) capacity, and that on other occasions the problem is caused by waste such as wait times, bugs or a lack of coordination.

It should be noted that the aim of the actions carried out in this step is not to increase the capacity of the bottleneck, but rather to determine why it is not producing the ideal throughput using the resources it has.
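One simple way to see where the bottleneck's existing capacity is lost is to break down how its time is actually spent. The sketch below assumes a hypothetical time log for the deployment stage, with made-up categories and hours. It measures waste without adding any resources, which is the spirit of this step.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

def time_breakdown(log: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Share of the bottleneck's hours spent in each activity category."""
    totals: Counter = Counter()
    for category, hours in log:
        totals[category] += hours
    grand_total = sum(totals.values())
    return {category: hours / grand_total for category, hours in totals.items()}

# One week of hypothetical time entries for the deployment stage.
log = [
    ("deploying", 10), ("waiting for Dev", 12), ("fixing failed deploys", 8),
    ("deploying", 6), ("waiting for approval", 4),
]
shares = time_breakdown(log)
print(shares["deploying"])  # 0.4
```

In this invented example, only 40% of the stage's time goes into deploying. The rest is wait time and rework, which can be attacked before anyone asks for more capacity.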

One thing that is extremely important at this point is not to implement measures that increase the capacity of the bottleneck, since the objective is to make optimum use of what is in place right now. Inexperienced managers often look for the easiest solution, which is elevating the constraint, and take inadequate measures. In the case of human resources, they will try to increase the size of the team without taking into account that this elevation action increases costs and does not always solve the problem. As Brooks’ law states, “adding manpower to a late software project makes it later”. This action therefore not only fails to solve the problem, it makes it worse.
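One reason behind Brooks’ law, as Brooks argues in The Mythical Man-Month, is that coordination effort grows with the number of pairwise communication paths in a team, n(n - 1)/2. The small function below simply evaluates that formula. It is not a model of any real team.

```python
def communication_channels(team_size: int) -> int:
    """Pairwise communication paths in a team of n people: n(n - 1) / 2."""
    return team_size * (team_size - 1) // 2

for n in (3, 5, 10):
    print(n, communication_channels(n))
# 3 3
# 5 10
# 10 45
```

Going from 5 to 10 people doubles the headcount but more than quadruples the paths along which work must be coordinated.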

An inexperienced developer will behave in the same way when faced with a similar problem. If there is a bottleneck in RAM, he/she will immediately ask for more RAM (elevation). This usually does not fix anything, because the cause of the bottleneck has not been addressed. The right thing to do is to look for the root cause, which may be poor programming.
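As a hypothetical illustration of poor programming being the root cause, consider summing the numbers in a large file. The first function reads the whole file into memory before summing, so its memory use grows with the file. The second streams the file line by line, so memory stays roughly constant. Both give the same result, and fixing the code, rather than adding RAM, removes the bottleneck. The file format (one integer per line) is an assumption made for the example.

```python
import os
import tempfile

def total_eager(path: str) -> int:
    # Reads every line into a list first: memory grows with file size.
    with open(path) as f:
        lines = f.readlines()
    return sum(int(line) for line in lines)

def total_streaming(path: str) -> int:
    # Iterates over the file one line at a time: memory stays roughly constant.
    with open(path) as f:
        return sum(int(line) for line in f)

# Small demo file with the numbers 1 to 100, one per line.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write("\n".join(str(n) for n in range(1, 101)))
    path = tmp.name

eager, streamed = total_eager(path), total_streaming(path)
print(eager, streamed)  # 5050 5050
os.remove(path)
```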

Returning to the previous example of the bottleneck detected in the deployment phase, we might observe a lack of coordination between the Dev and Ops teams, caused by working in silos, that leads to wait times, complex and error-prone deployments, manual deployments, very large batches, etc. In fact, when we look at these aspects, we see that they are the types of waste we identified when we discussed Lean.

Once we have analysed why our bottleneck is not performing at its maximum capacity, we need to make decisions about how to correct this. For example, we can:

- Make the Dev and Ops teams work together, removing the silos.

- Reduce the size of deliveries.

- Maintain a continuous flow using buffering techniques.

- Standardise deliveries.

- etc.
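The effect of reducing the size of deliveries can be seen in a deliberately simplified model. Assume one item is finished per day and a deployment happens only once a full batch has accumulated, with the deployment itself taking no time. Each finished item then waits, on average, (batch size - 1)/2 days before reaching production. The model is an illustrative assumption. It ignores the per-deployment cost that, in practice, pushes teams towards larger batches.

```python
from statistics import mean

def average_wait_days(batch_size: int, items: int = 120) -> float:
    """Average days a finished item waits before being deployed.

    Assumes one item is finished per day, a deployment happens as soon as
    `batch_size` items have accumulated, and `items` is a multiple of
    `batch_size` (so every batch is complete).
    """
    waits = []
    for day in range(items):
        position_in_batch = day % batch_size
        # The batch ships on the day its last item is finished.
        waits.append(batch_size - 1 - position_in_batch)
    return mean(waits)

for size in (1, 5, 20):
    print(size, average_wait_days(size))
```

Shipping each item on its own removes the waiting entirely. Batches of 20 add an average of 9.5 days of waiting to every item.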

It is important to note that we are not implementing any elevation action that would increase the capacity of the bottleneck. That will be done later.

3. Subordinate everything to the decisions from the previous step

This is the crucial step, since it involves changing the system and requires difficult decisions to be made and implemented. What we need to do is implement the decisions made in the previous step and subordinate everything else to them. This may seem very radical, but we need to bear in mind the following. Any improvement made in a step after the bottleneck will not improve the flow at all, because the flow will still be held back by the bottleneck. Any improvement made in a step before it will only saturate the bottleneck even more and make the situation worse.
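A small simulation makes the last point concrete. The sketch assumes a hypothetical two-stage flow in which development hands items to a deployment stage that can process a fixed number per day. Speeding up development leaves deliveries unchanged and only grows the queue in front of the bottleneck. Pacing development to the bottleneck's rate delivers the same amount with no queue at all.

```python
from typing import Tuple

def simulate(dev_rate: int, deploy_rate: int, days: int) -> Tuple[int, int]:
    """Return (items delivered, items waiting for deployment) after `days`.

    Development adds `dev_rate` items per day to the queue; deployment (the
    bottleneck) ships at most `deploy_rate` of the waiting items per day.
    """
    queue = delivered = 0
    for _ in range(days):
        queue += dev_rate                   # upstream output joins the queue
        shipped = min(queue, deploy_rate)   # the bottleneck takes what it can
        queue -= shipped
        delivered += shipped
    return delivered, queue

print(simulate(dev_rate=5, deploy_rate=2, days=10))  # (20, 30)
print(simulate(dev_rate=8, deploy_rate=2, days=10))  # (20, 60)
print(simulate(dev_rate=2, deploy_rate=2, days=10))  # (20, 0)
```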

In this step, we are interested in analysing the system holistically, looking to improve the flow as a whole rather than making local optimisations, since improvements made at the bottleneck directly improve the flow of the entire system.

For example, one of the decisions we made in the previous step was that the Dev and Ops teams would work together. This may mean that the individual work of the two separate teams will be affected by the new way of working, and may even deteriorate, but by improving the flow at the bottleneck we will have achieved a significant increase in the speed of value delivery.

4. Elevate the bottleneck

Once we have managed to optimise the operation of the bottleneck, it is time to increase its capacity if we want to increase the throughput of the system.

For example, we might reach a point in our deployment process where, because we deploy manually, we cannot increase throughput any further, although we believe we could if we automated it. Automation is an elevation action, and as such it should be tackled after the actions that exploit the bottleneck. If we did it earlier, we would be automating processes that had not been optimised. We might see some improvement, but we would also be automating the existing waste, and the benefit might not even outweigh the cost. Bear in mind that elevation actions are often more expensive because they require more resources, so they should be the last to be implemented.

If we notice that, by implementing these types of actions, throughput does not increase, it is likely that the bottleneck has moved to another point within our value stream, and we should move directly to step 5 to identify the new bottleneck.

For example, returning to the earlier problem with RAM, once the system has been optimised we could increase its memory. If the system still does not improve, the bottleneck has probably moved elsewhere (to the CPU, network or disk storage, for example).

If our deployment process reaches a point where automation brings no further improvement, the bottleneck may have shifted to the business side, for example, because the business cannot generate ideas/requests at the rate at which the system is able to deliver them.
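This shift can be sketched with the same serial-flow assumption used earlier. In the hypothetical figures below, automating deployment raises its capacity from 2 to 10 requests per day. The throughput of the whole system only doubles, because the business side, at 4 requests per day, becomes the new constraint.

```python
from typing import Dict, Tuple

def elevate(stages: Dict[str, float], stage: str,
            new_capacity: float) -> Tuple[float, float, str]:
    """Raise one stage's capacity and report what happens to the flow.

    Returns (throughput before, throughput after, bottleneck after),
    assuming a serial flow whose throughput is its slowest stage.
    """
    before = min(stages.values())
    elevated = {**stages, stage: new_capacity}  # leave the input untouched
    new_bottleneck = min(elevated, key=elevated.get)
    return before, elevated[new_bottleneck], new_bottleneck

# Hypothetical capacities in requests per day.
stages = {"business requests": 4.0, "development": 6.0, "deployment": 2.0}
print(elevate(stages, "deployment", 10.0))  # (2.0, 4.0, 'business requests')
```

Any further investment in deployment would be wasted until the new constraint is addressed.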

5. Go back to step 1 avoiding system inertia

Once the previous steps have been completed, the most common scenario is that the bottleneck has moved to another point in the system, which means we just have to start again with the search for a new bottleneck.

As we can see, this procedure, when performed systematically, leads to a process of continuous improvement that aims to increase throughput and reduce cycle time on a constant basis. This is particularly important to avoid slipping into a state of inertia, continuing to do things the same way and making the same mistakes.
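The cost of inertia can be illustrated with a toy comparison. The sketch below applies the same amount of improvement effort per cycle in two ways. One way keeps improving the first bottleneck that was found. The other goes back to step 1 each cycle and improves whatever the constraint is now. The stages, capacities and fixed step size are hypothetical, and the serial-flow assumption still applies.

```python
from typing import Dict

def improve(stages: Dict[str, float], rounds: int, refocus: bool,
            step: float = 2.0) -> float:
    """Apply `rounds` improvement cycles and return the final throughput.

    Each cycle adds `step` units of capacity to one stage. With refocus=True
    the target is the current bottleneck (step 1 repeated every cycle); with
    refocus=False the first bottleneck found is improved forever (inertia).
    """
    stages = dict(stages)  # work on a copy
    target = min(stages, key=stages.get)
    for _ in range(rounds):
        if refocus:
            target = min(stages, key=stages.get)
        stages[target] += step
    return min(stages.values())

stages = {"business": 4.0, "development": 5.0, "testing": 3.0, "deployment": 2.0}
print(improve(stages, rounds=4, refocus=False))  # 3.0
print(improve(stages, rounds=4, refocus=True))   # 5.0
```

With the same effort, the inertia strategy stalls at the testing stage's capacity, while re-identifying the constraint every cycle keeps throughput rising.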

Retrospective meetings, in which we examine what works and what does not, fit perfectly into this quest for continuous improvement. In fact, a very effective way to conduct a retrospective is to think about the current flow constraint, decide which exploitation and/or elevation actions to take, execute them during the next sprint, and evaluate the result at the following retrospective.

The Theory of Constraints and DevOps

In the above paragraphs, I have described the steps of the Theory of Constraints along with examples that draw a parallel with the dynamics of implementing DevOps in a system.

It is fair to say that the emergence and development of the DevOps movement has followed the sequence described by the theory. The adoption of agile practices by developers, and the resulting increase in the number of deliveries and in the speed of development, shifted the constraint to the operations side.

Analysing why value was not being delivered at the rate at which development teams were generating it made it clear that there was a bottleneck in the deployment process. Analysing that bottleneck and deciding how to exploit it is what led to the emergence of the DevOps movement.

The clearest decision seemed to be that if the Dev and Ops teams worked together, the throughput of the system would improve, which is exactly what happened in reality. In fact, decisions were made, and others were subordinated in order to implement them. For example, it was necessary to eliminate the decision that the Dev and Ops teams should be separated (driven in the past by the quest for increased system security), with the aim of improving throughput.

Only when the exploitation actions had no further potential did other elements begin to emerge, such as the automation of the deployment and provisioning processes. This is what made productivity skyrocket, and not that long ago we were seeing incredible numbers. Today, Flickr’s ten deployments per day seem very low compared with Amazon, which performs one deployment every 11.6 seconds on average.
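To put these figures on the same scale, the arithmetic below converts Amazon’s average interval into deployments per day. It assumes the 11.6-second average held over a full 24-hour day, which the figure itself does not guarantee.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

amazon_per_day = SECONDS_PER_DAY / 11.6  # one deployment every 11.6 s on average
flickr_per_day = 10

print(round(amazon_per_day))                   # 7448
print(round(amazon_per_day / flickr_per_day))  # 745
```

Under that assumption, the gap is roughly three orders of magnitude.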

Inevitably, the bottleneck moved to another part of the process, and that is why new initiatives are now emerging, such as DevSecOps, where security comes into play. What we have seen is that the new constraint has shifted to the security side and the same actions that worked successfully are beginning to be implemented. Why don’t we integrate the Security team with Dev and Ops? Why is security not a continuous process? Why don’t we automate the security assurance process?

The same could be said about Testing. The quest for continuous and automated testing is being increasingly pursued to meet the demands for speed in value delivery.

In short, consciously or not, the procedure described by the Theory of Constraints is being followed. The companies that apply it consciously and systematically are the ones that achieve continuous improvement sustained over time. They are also the ones effectively implementing DevOps, because they adopt a culture of continuous improvement that ties in with the third way of DevOps: the Culture of Continuous Experimentation and Learning.

In Conclusion

In this article, I have provided a brief introduction to the Theory of Constraints and its use in the DevOps world. I did not set out to provide a detailed description of this theory, but rather to explain its concepts in a simple way and explore how they have influenced the development of the DevOps movement, which is what concerns us.

There are other models that promote a culture of continuous improvement, but the Theory of Constraints is very simple and intuitive to use, hence its years of success in manufacturing and now in the IT and DevOps world.

In any event, understanding the conclusions drawn and interpreting the Theory of Constraints from a practical point of view has required many hours of work. If you would like to benefit from Xeridia’s experience in this area, please get in touch with us now.

Emiliano Sutil is a Project Manager at Xeridia