So far in this blog, we have talked about the influence of the Lean movement on the DevOps movement. We have also seen how to create a Value Stream Map and how to use it in a DevOps environment. In this article, I’m going to review a basic DevOps concept that is again rooted in Lean thinking: working in small batches. In a DevOps environment, the equivalent of small batches is small deliveries, or small increments of functionality.

As we noted in the post about the Lean movement, one of the most important wastes is excess inventory, also known as work in progress or WIP, which inevitably leads to long cycle times. Reducing this inventory, which in practice means reducing the size of each delivery, significantly reduces the cycle time and smooths out the value delivery stream. This follows from Little’s Law, which states that, in a stable process, the average cycle time equals the average work in progress divided by the average throughput.
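As a rough illustration, Little’s Law can be written as follows. The symbols CT (average cycle time), WIP (average work in progress) and TH (average throughput) are naming assumptions made here, and the law relates long-run averages in a reasonably stable process.

$$
\text{CT} = \frac{\text{WIP}}{\text{TH}}
$$

With made-up numbers, a team that keeps 30 features in progress and finishes 5 features per week has an average cycle time of 6 weeks. If the same team limits its work in progress to 10 features and throughput stays at 5 per week, the average cycle time drops to 2 weeks:

$$
\frac{30\ \text{features}}{5\ \text{features/week}} = 6\ \text{weeks}
\qquad
\frac{10\ \text{features}}{5\ \text{features/week}} = 2\ \text{weeks}
$$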

Analysing Little’s Law

To examine the previous statement, we can use the Value Stream Map created in the previous post or a similar one and analyse how features move along the value stream.

In an organisation that does not use small batches, the Post-it® notes or features will start accumulating at each stage on our whiteboard. When a sufficiently large volume, defined by the batch size, is reached, all Post-its are simultaneously passed on to the next stage. For example, those responsible for capturing user requests, requirements, etc. wait until they have a complete requirements document (or at least a sufficient number of requirements) before passing it on to the analysts, who in turn will wait until they have completed the analysis before moving it on to development, and so on. What we are actually seeing is a large batch of work moving through the Value Stream.

Analysing what happens with each feature, we see that, instead of moving immediately to the next stage, it has to wait to move – as inventory – until the batch is completed. The larger the batch, the longer the wait time the feature has to endure, which increases the cycle time.

What would happen if, instead of waiting for the batch to be completed, we changed the strategy and moved each feature on to the next stage in the value chain as soon as it was ready? The time a feature spends waiting for the rest of its batch would drop to zero, and the total cycle time would therefore be significantly reduced.
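The effect of batch size can be sketched with a small simulation. This is a minimal model under simplifying assumptions, not a description of any real process: every stage works on one feature at a time, every feature takes one day per stage, all features are available on day 0, and a stage hands work over only when a whole batch is finished. The function name `delivery_times` and all the numbers are hypothetical.

```python
from statistics import mean


def delivery_times(num_features, num_stages, batch_size, days_per_feature=1):
    """Return the day on which each feature leaves the last stage."""
    # ready[i] is the day on which feature i becomes available to a stage.
    ready = [0] * num_features
    for _ in range(num_stages):
        finished = []
        stage_free_at = 0
        for i in range(num_features):
            # A feature starts when it has arrived and the stage is free.
            start = max(ready[i], stage_free_at)
            stage_free_at = start + days_per_feature
            finished.append(stage_free_at)
        # Hand-off: a feature moves on only when its whole batch is finished.
        ready = []
        for first in range(0, num_features, batch_size):
            batch = finished[first:first + batch_size]
            ready.extend([max(batch)] * len(batch))
    return ready


for size in (10, 1):
    days = delivery_times(num_features=10, num_stages=3, batch_size=size)
    print(f"batch size {size}: first delivery on day {min(days)}, "
          f"average delivery on day {mean(days)}")
```

In this toy model, ten features moved as a single batch through three stages are all delivered on day 30. Moved one at a time, the first feature is delivered on day 3 and the average delivery day is 7.5, even though the amount of work is the same.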

We can draw an analogy with a situation that we have seen countless times on television or at the cinema: a group of people working together to put out a fire, known as a bucket brigade. They immediately arrange themselves into a human chain and pass each bucket of water from one person to the next, so that there is a continuous supply of water to throw on the fire. The large-batch equivalent would be for the first person in the chain to wait until there were, say, ten or more buckets before passing them all on to the next person. How long would it take for the first bucket to reach the fire? It would probably arrive too late.

In software development, we can still choose to work in large batches, but we run the risk that, by the time our product reaches the market, it will be too late, just like the batch of buckets.

The point is obvious when fighting a fire, where speed of delivery is essential. It seems less obvious in the world of software development, even though time to market is a critical factor today.

This situation of creating large batches is equivalent to what we used to see in mass production, where a large volume of items was needed to make manufacturing profitable. We should remember that the Lean movement made it possible for car manufacturing to be profitable without the need to generate those large batches. In the same way, best practice and sound strategies in DevOps make it cost-effective to deliver functionality in small batches rather than in large batches.

Where can large batches be found?

There are many points along the value chain where large batches of work can be found. The following are some examples:

- Long requirements or analysis documents. A requirements document is effectively a batch of work with a multitude of features.

- Large product backlogs. This would be equivalent to the requirements document but following the Scrum framework.

- Long-lived branches. Each branch becomes a batch and the longer it lives, the larger it grows.

- A large amount of work started at the same time and not finished.

- Etc.

The aim is to reduce this work in progress. But what are the benefits of this strategy?

Benefits of using small batches

We have seen that the main benefit of using small batches is a shorter cycle time, but there are other benefits that also contribute to the successful implementation of a DevOps approach.

- A knock-on effect of using small batches is that it forces the Dev and Ops teams to interact more frequently, making it easier to transition from a traditional model to DevOps. For Ops, receiving a delivery from Dev every day is very different from receiving one once a quarter. This interaction creates a strong sense of team spirit.

- The reduction in cycle time resulting from the use of small batches means that feedback arrives sooner and more often.

- Making more frequent deliveries improves the process through repetition. If something is hard to do, do it more frequently until it becomes routine. This ties in with the Lean concept of Kata.

- Because the deliveries are smaller, the risk associated with them is drastically reduced.

- By reducing the complexity of deliveries, the review and validation processes are simplified.

- As we already mentioned, wait times are significantly reduced and we eliminate a major source of waste.

- The flow of work within the value stream is smoothed out, resulting in a significant increase in the stability and predictability of the process.

- It is possible to detect errors early, improving quality, a fundamental aspect of DevOps.

- Last but not least, implementing the deployment pipeline and continuous delivery becomes much easier. Attempting continuous delivery with large deliveries does not seem feasible.

As we can see, there are many benefits of using small batches and they alone justify the change from one model to another.

Methods for working with small batches

Although it may appear simple, changing our work methodology is, in my view, radical and difficult.

At the definition level, changing from using the traditional concept of requirements to using user stories is fundamental. The problem with user stories is that it is not easy to create them following the INVEST principle. The letters I (Independent) and S (Small) in this acronym are the ones that show us how it relates to small batches. If I manage to create small and independent user stories, I will be able to make small deliveries.

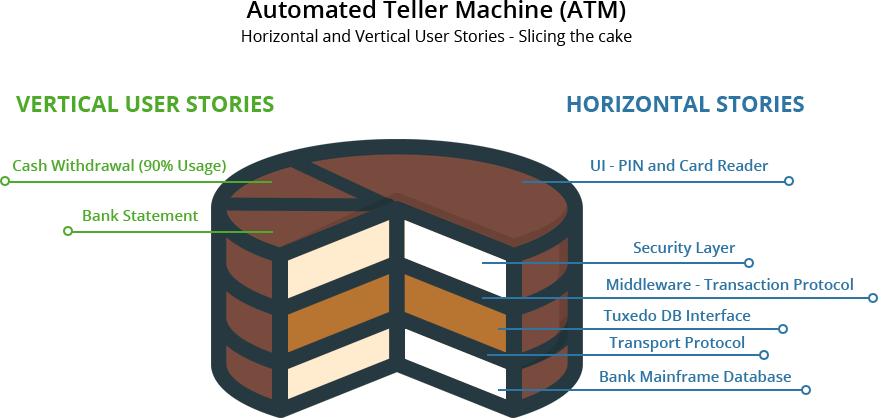

This links to the concept of Vertical Requirements where functionality is delivered from all application layers, as opposed to horizontal requirements that build up layer by layer.

Source: Delta Matrix

There are several methods for splitting user stories in this way, such as the hamburger method or the SPIDR technique. The important thing at this point is to be able to split a particular feature into user stories that we can deliver in small batches.

At the development level, once we have a good set of user stories, we can use techniques such as short-lived feature branches or feature flags to bring us closer to continuous delivery, or even consider a microservices architecture.
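To make the idea of a feature flag concrete, here is a minimal sketch. Everything in it is a hypothetical example: the flag name `new_checkout`, the functions `is_enabled` and `checkout_total_cents`, and the discount rule are invented for illustration. Real projects usually read their flags from configuration or from a dedicated flag service rather than from a hard-coded dictionary.

```python
# Hypothetical flag store: unfinished work ships switched off.
FLAGS = {"new_checkout": False}


def is_enabled(flag_name, flags=FLAGS):
    """Return True only if the flag exists and is switched on."""
    return flags.get(flag_name, False)


def checkout_total_cents(prices_cents, flags=FLAGS):
    """Total of an order in cents, with the new pricing rule behind a flag."""
    total = sum(prices_cents)
    if is_enabled("new_checkout", flags):
        # New behaviour (assumed rule): 10% off orders above 100.00.
        if total > 10_000:
            return total * 90 // 100
    # Old behaviour stays the default until the flag is turned on.
    return total


print(checkout_total_cents([6_000, 6_000]))
print(checkout_total_cents([6_000, 6_000], {"new_checkout": True}))
```

Because the new rule is switched off by default, it can be merged and deployed in small increments without changing what users see. Turning the flag on then becomes a separate, small decision.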

Conclusion

The famous presentation "10+ Deploys Per Day: Dev and Ops Cooperation at Flickr", which marks one of the milestones in the DevOps movement, makes explicit reference to the use of "small frequent changes". The presentation makes it clear that one factor that made this number of daily deliveries possible was the size of the release. If a release consists of changing a single line of code, continuous delivery is very easy to implement. As the size increases, the difficulty increases too, and disproportionately so. In fact, many failed DevOps implementations are caused by an attempt to automate the delivery process while still using "big bang releases". In that situation, the complexity and difficulty are too great.

The first step should be to analyse the delivery process and keep moving towards smaller deliveries. Once this has been achieved, the deployment pipeline can be automated.

In this article, I wanted to show how a strategic decision as simple as the size of releases has a direct effect on our ability to deliver continuously.

Since this continuous delivery, or at least rapid delivery, is a basic pillar of DevOps, we can state that using small batches is fundamental to a successful implementation of DevOps.

And although it may seem simple, it is not a trivial matter at all. It is therefore very important that you discuss any questions you have or any changes you are considering with a team of experts such as those here at Xeridia.

Emiliano Sutil is a Project Manager at Xeridia