In recent years, the amount of data being generated has increased considerably. Today, in just one second, almost 9,000 tweets are posted, more than 900 photos are uploaded to Instagram, more than 80,000 Google searches are performed and nearly 3 million emails are sent. In other words, we generate a huge amount of information every day. According to the World Economic Forum, 463 exabytes of data will be generated daily by 2025 [1]. That is roughly 98 billion single-layer (4.7 GB) DVDs' worth of data every day.

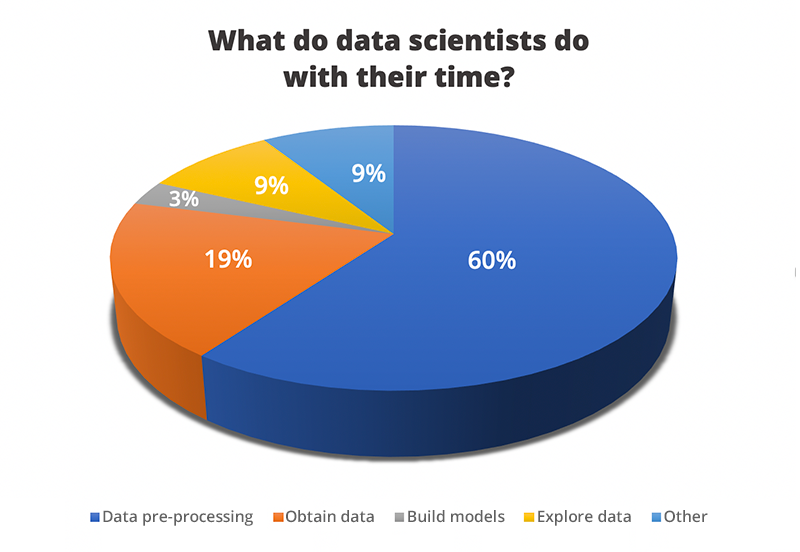

Just as a cook needs to wash the vegetables, peel the potatoes, marinate the meat and carefully measure out the ingredients (among other things) before preparing a dish, a data scientist needs to carry out a number of preliminary operations to make the data “edible” before tackling the problem at hand. Given the large volume of data involved, this initial step is often crucial: first, to correct the many defects we may come across, and second, to ensure that we can extract the information that is relevant to our problem. According to several surveys of data scientists, about 80% of their working time goes into obtaining, cleansing and organising data, while only 3% is spent on building machine learning models [2].

As we’ve discussed in previous posts, solving a machine learning problem involves optimising a mathematical function. This means we have to work with numerical data that can be used in a mathematical context.

Generally, when we receive a data set, in addition to identifying numerical values, we usually have to work with unstructured data. This is data that does not conform to a particular schema, such as text, images, videos, etc. If we want to be able to use this data to train our model, it must all be converted into numbers. In this post, we’re going to talk about data cleansing tools. In the next Xeridia blog post on artificial intelligence, we’ll talk about transformation tools in more depth.

Data Cleansing Techniques in Artificial Intelligence

Data cleansing tools allow us to fix specific errors in the data set we’re dealing with, errors that could otherwise interfere with the learning process and degrade the resulting model. The most common defects fixed with these tools are:

- Missing values: When working with a large data set, it’s very common for some values to be empty. This can happen for myriad reasons (no actual measurement, an error when storing the information, an error when retrieving it, etc.). The main problem with these missing values is that they can prevent the machine learning system from being trained correctly. This is because a missing value is not a number, so it cannot be processed numerically. To solve this problem, there are several approaches that can be used depending on the data we’re dealing with:

  - Interpolation: If we’re dealing with time-related data, a common approach is to interpolate the missing data from the nearby data points.

  - Populate with a fixed value: This could be the mean, the mode or even the value 0 (zero).

  - Populate using regression: There are techniques that try to predict the missing value using the other variables in our data set. One of these is MICE (Multivariate Imputation by Chained Equations) [3].

  - Consider the empty value as a category: If the variable with missing data is used for categorisation purposes, we can add an extra category that groups together all those data points where this field is empty.

  - Delete the entire record: If none of the above techniques is suitable for populating the empty values, we can sometimes choose to discard the record and work only with those that are complete.

- Data inconsistency: When we’re processing data, we often detect data format or data type errors. This may be due to a data reading error or to poor data storage. For example, our data set may contain dates that usually begin with the day of the month but in certain records begin with the year, or values that should be numerical but include other types of characters, etc. Multiple validation checks need to be carried out, and any problems identified and resolved, before the data can be deemed consistent. At this stage, there are several validation techniques that could be used depending on the variable being analysed. Sometimes, this requires expert knowledge of the problem.

- Duplicate values: We may find at some point that we have duplicate records in our data set. It’s important to detect these records and delete every copy beyond the first. Not doing so could lead the machine learning method to give more weight to the duplicated element than to the rest of the data, so that the model is trained in a biased way.

- Outliers: Because of storage, measurement or data insertion errors, outliers (anomalous data) may appear in some of our fields. These values can substantially distort the distribution of the data and thus affect the entire learning process. There are many techniques that can be used to try to detect these outliers. We’ll expand on this topic in future posts.
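The missing-value strategies listed above (interpolation, populating with a fixed value, treating the empty value as a category and deleting incomplete records) can be sketched in plain Python. This is a minimal illustration on hypothetical toy data, assuming `None` marks a missing value; the function names are made up for the example. In practice, pandas offers equivalents such as `DataFrame.interpolate`, `fillna` and `dropna`.

```python
from statistics import mean, mode

# Hypothetical daily temperature readings; None marks a missing measurement.
readings = [21.0, None, 23.0, 24.0, None, None, 27.0]


def interpolate_linear(values):
    """Fill interior gaps linearly between the nearest known neighbours.

    Gaps at the very start or end have a neighbour on one side only,
    so they are left as None.
    """
    result = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            result[i] = values[left] + step * (i - left)
    return result


def fill_fixed(values, strategy="mean"):
    """Replace each None with the mean, the mode or zero of the known values."""
    known = [v for v in values if v is not None]
    fillers = {"mean": mean, "mode": mode, "zero": lambda _: 0}
    fill = fillers[strategy](known)
    return [fill if v is None else v for v in values]


def fill_as_category(labels, missing_label="unknown"):
    """Group every empty categorical value under an extra category."""
    return [missing_label if label is None else label for label in labels]


def drop_incomplete(records):
    """Keep only the records in which every field has a value."""
    return [r for r in records if all(v is not None for v in r.values())]


print(interpolate_linear(readings))  # [21.0, 22.0, 23.0, 24.0, 25.0, 26.0, 27.0]
print(fill_fixed(readings, "mean"))
print(fill_as_category(["red", None, "blue"]))  # ['red', 'unknown', 'blue']
print(drop_incomplete([{"id": 1, "age": 34}, {"id": 2, "age": None}]))
```

Which strategy fits depends on the variable: interpolation only makes sense when neighbouring points are related (as in a time series), whereas filling with the mean ignores that order entirely.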
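Populating missing values using regression can be illustrated with a deliberately simplified case: one complete variable is used to predict another through a least-squares line fitted on the complete records. This is not MICE itself; MICE cycles a regression model over every incomplete variable, repeating the process to produce several imputed data sets [3]. The records and field names below are hypothetical. For a MICE-inspired tool, scikit-learn provides `IterativeImputer`.

```python
from statistics import mean

# Hypothetical records: height (cm) is always known, weight (kg) is sometimes missing.
rows = [
    {"height": 150, "weight": 50.0},
    {"height": 160, "weight": 60.0},
    {"height": 170, "weight": None},
    {"height": 180, "weight": 80.0},
]


def impute_by_regression(rows, x_key, y_key):
    """Predict missing y values from x with a least-squares line.

    The line is fitted only on rows where y is present; the input rows
    are not modified.
    """
    complete = [r for r in rows if r[y_key] is not None]
    xs = [r[x_key] for r in complete]
    ys = [r[y_key] for r in complete]
    x_bar, y_bar = mean(xs), mean(ys)
    # Ordinary least squares: slope = cov(x, y) / var(x).
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return [
        {**r, y_key: intercept + slope * r[x_key]} if r[y_key] is None else dict(r)
        for r in rows
    ]


# The 170 cm row is imputed as roughly 70.0 kg.
print(impute_by_regression(rows, "height", "weight"))
```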
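The two inconsistency examples mentioned above, dates that sometimes start with the year and numeric fields containing other characters, can be normalised with a small validation step. This sketch assumes two date formats (day-first `DD/MM/YYYY` and year-first `YYYY-MM-DD`) and a comma used as a decimal separator; both are hypothetical choices for the example, and a real data set would need its own rules, often decided with expert knowledge of the problem. Values that cannot be repaired become `None` so they can be handled as missing values.

```python
from datetime import date, datetime
from typing import Optional

# Assumed formats for this example: day-first, then year-first.
DATE_FORMATS = ("%d/%m/%Y", "%Y-%m-%d")


def parse_date(text: str) -> Optional[date]:
    """Try each known format in turn; return None if none matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


def parse_amount(text: str) -> Optional[float]:
    """Keep digits, sign and decimal point; return None if no number remains.

    Assumes a comma is a decimal separator, so "1,000" would become 1.0.
    """
    cleaned = "".join(
        ch for ch in text.replace(",", ".") if ch.isdigit() or ch in ".-"
    )
    try:
        return float(cleaned)
    except ValueError:
        return None


# Hypothetical raw values.
raw_dates = ["03/02/2020", "2020-02-17", "28/02/2020"]
raw_amounts = ["12.5", "7,30", "15 €", "n/a"]

print([parse_date(d) for d in raw_dates])
print([parse_amount(a) for a in raw_amounts])  # [12.5, 7.3, 15.0, None]
```

Note that an input such as "01/02/2020" is ambiguous on its own; the sketch simply trusts the day-first assumption, which is exactly the kind of decision that needs to be checked against the data source.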
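Removing duplicate records, keeping only the first copy of each, can be sketched as follows. This minimal version treats two records as duplicates only when every field matches exactly (field order does not matter) and assumes all values are hashable; the sample records are hypothetical. pandas provides the same behaviour through `DataFrame.drop_duplicates`, which keeps the first occurrence by default.

```python
def drop_duplicates(records):
    """Return records with exact duplicates removed, keeping the first occurrence."""
    seen = set()
    unique = []
    for record in records:
        # Sorting the items makes the key independent of field order.
        key = tuple(sorted(record.items()))
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records: the third is the first one with its fields reordered.
records = [
    {"id": 1, "city": "Madrid"},
    {"id": 2, "city": "Bilbao"},
    {"city": "Madrid", "id": 1},
]

print(drop_duplicates(records))
```

Near-duplicates (for example, "Madrid" versus "madrid ") would survive this check, so they are usually normalised first as part of fixing data inconsistencies.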
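As one example of the many outlier-detection techniques mentioned above, the interquartile range (IQR) rule, also known as Tukey's fences, flags values lying far outside the middle half of the data. This sketch uses the conventional multiplier `k = 1.5` and a hypothetical series of sensor readings in which one value looks like a storage or insertion error. Flagged values should be inspected rather than deleted automatically, since some outliers are genuine.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return the values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)  # the three quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical sensor readings; 98.0 is suspiciously far from the rest.
sensor = [10.1, 9.8, 10.4, 10.0, 9.9, 10.2, 98.0]

print(iqr_outliers(sensor))  # [98.0]
```

The IQR rule is attractive because quartiles are barely affected by the outliers themselves, unlike a rule based on the mean and standard deviation, which a single extreme value can distort.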

In our next blog post, we’ll look at the data transformation tools we use to tailor all the information we have so that it’s valid for training a machine learning model.

Oscar García-Olalla Olivera is a Data Scientist and R&D Engineer at Xeridia

[1] How much data is generated each day? (accessed 02/2020)

[2] Cleaning Big Data: Most Time-Consuming, Least Enjoyable Data Science Task, Survey Says (accessed 02/2020)

[3] Azur MJ, Stuart EA, Frangakis C, Leaf PJ. Multiple imputation by chained equations: what is it and how does it work?. Int J Methods Psychiatr Res. 2011;20(1):40–49. doi:10.1002/mpr.329