Have you ever wondered how a computer can automatically process an image and reach a conclusion as complex as detecting the presence of an object?

As living beings with the ability to see, we have biological sensors that allow us to receive sequences of images from the world around us and interpret them, extracting concepts and reaching conclusions as complex as the one just mentioned with very little difficulty. By contrast, a computer, even when connected to a camera, operates under instructions deliberately written by a programmer. This means that, to achieve similar functionality, a programmer would need to explicitly code every condition required to identify a particular object in an image.

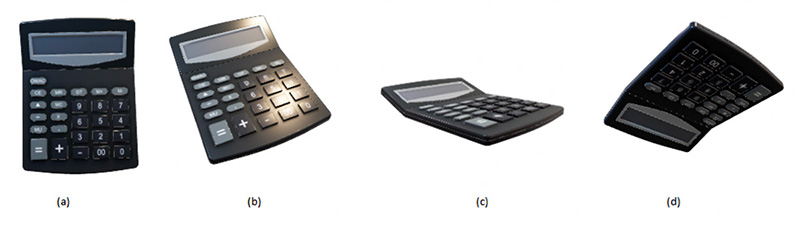

Can you imagine how many conditions would be required to determine that each of these is an image of a calculator?

How many more conditions would be required to ensure that these two images are not confused with calculators?

And if we picked a new image of a calculator, would those conditions be sufficient to detect the calculator in the image unequivocally?

A programmer could write conditions based on the pixel colours of the images in order to distinguish the images of the calculator from those of the laptop computer. However, as the examples show, the same object can produce countless different images depending on its position, the distance from which the photo was taken, the lighting at the time, or even a slight rotation of the camera.

This is known as the problem of invariants (which we will discuss in a later blog post). It is difficult for a programmer to write a condition-based method that generalises well enough for the computer to correctly identify an object in an image it has never seen before.
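To make the difficulty concrete, here is a minimal Python sketch, assuming a made-up 5×5 greyscale image and a hypothetical hand-written rule (`pixel_rule`) that checks specific pixel positions. Moving the same object a single pixel to the right is enough to break the rule:

```python
# A tiny 5x5 greyscale "image": 0 = black background, 255 = white object.
# The values are made up purely for illustration.
original = [
    [0,   0,   0, 0, 0],
    [0, 255, 255, 0, 0],
    [0, 255, 255, 0, 0],
    [0,   0,   0, 0, 0],
    [0,   0,   0, 0, 0],
]

def shift_right(image, pixels=1):
    """Return a copy of the image moved `pixels` columns to the right,
    filling the vacated columns with background (0)."""
    width = len(image[0])
    return [[0] * pixels + row[: width - pixels] for row in image]

def pixel_rule(image):
    """Hypothetical hand-written condition: 'the object is present if
    pixels (1, 1) and (2, 2) are white'. It encodes one exact position."""
    return image[1][1] == 255 and image[2][2] == 255

shifted = shift_right(original)
print(pixel_rule(original))  # True
print(pixel_rule(shifted))   # False: same object, moved by one pixel
```

Real changes in position, distance, lighting and rotation are far larger than a one-pixel shift, which is why rules tied to exact pixel values rarely generalise.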

Can this kind of problem be solved by a computer? How do living beings do it? These are the questions we will answer in this article.

What is an image in the context of Computer Vision?

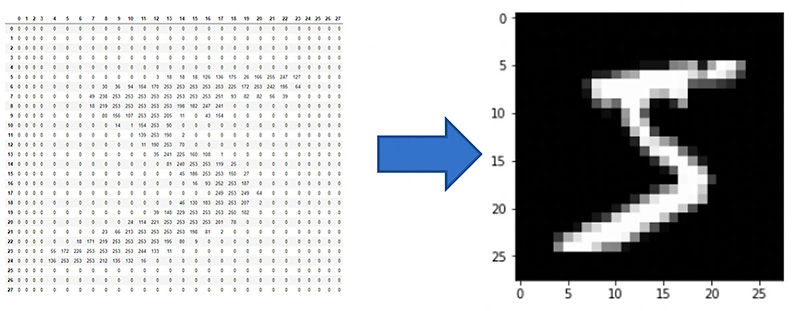

An image is a matrix of pixels, and each pixel is typically a combination of the colours red, green and blue (sometimes with an extra channel, the alpha value, representing the level of transparency). This colour model is known as RGB (three colours) or RGBA (three colours plus alpha), after the initials of its channels in the order in which they are usually stored (Red, Green, Blue).
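As a minimal sketch in plain Python (no imaging library assumed), an RGBA image can be held as rows of pixels, where each pixel is a tuple of four values between 0 and 255. The pixel values below are made up; the code shows how to read the matrix dimensions and the channels of a single pixel, and how dropping the alpha channel yields an RGB image:

```python
# A 2x2 RGBA image stored as rows of pixels; each pixel is (R, G, B, A)
# with values from 0 to 255. Alpha follows the common convention where
# 255 is fully opaque and 0 is fully transparent. Values are illustrative.
image = [
    [(255, 0, 0, 255), (0, 255, 0, 255)],    # red, green
    [(0, 0, 255, 255), (255, 255, 255, 0)],  # blue, fully transparent white
]

height = len(image)
width = len(image[0])
channels = len(image[0][0])
print(height, width, channels)  # 2 2 4

# Read the four channel values of the pixel at row 1, column 0.
r, g, b, a = image[1][0]
print(r, g, b, a)  # 0 0 255 255

# Dropping the alpha channel turns RGBA into plain RGB.
rgb_image = [[pixel[:3] for pixel in row] for row in image]
print(rgb_image[1][1])  # (255, 255, 255)
```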

Our perception of an image is the result of the combination of these three channels. However, at the computational level, images are represented numerically:

Figure 1. The digit “5” drawn in greyscale (a single channel), extracted from the MNIST dataset. [1]
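A colour image can be reduced to a single channel, like the MNIST digit in Figure 1, by combining its three channels into one intensity per pixel. The sketch below assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), which are a common convention rather than the only way to perform this conversion:

```python
def to_greyscale(rgb_image):
    """Collapse each (R, G, B) pixel into a single 0-255 intensity using
    the ITU-R BT.601 luma weights (one common convention among several)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]

rgb = [
    [(255, 255, 255), (0, 0, 0)],  # white, black
    [(255, 0, 0), (0, 0, 255)],    # pure red, pure blue
]
grey = to_greyscale(rgb)
print(grey)  # [[255, 0], [76, 29]]
```

The result is a plain matrix of numbers with one value per pixel, which is exactly the kind of representation shown in Figure 1.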

One distinctive feature of images is that they often contain disordered information mixed with noise. An image stores a large amount of information, of which only a fraction is relevant to a given intelligent task.

How did Computer Vision come about?

Computer Vision is a branch of Artificial Intelligence that emerged from the need to imitate the intelligent behaviour that living beings exhibit when processing images. Detecting objects in images, categorising them, segmenting them and otherwise processing them are just some of the many tasks that computer vision can tackle.

To carry out tasks such as these, computer vision relies on what is considered one of its pillars: extracting the information relevant to the desired purpose. This task is called description; people perform it constantly, and it is a fundamental step in the extraction of knowledge:

“A description allows information to be represented using, typically, less information than there was initially, leaving only those attributes or characteristics that allow an entity, such as an object, to be identified.”
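To illustrate the idea, the following sketch describes a greyscale image with just three numbers: how many pixels are bright enough to count as "ink", and the height and width of the box that encloses them. The 6×6 image, the threshold of 128 and the choice of features are all assumptions made for illustration; with the same function, a 28×28 MNIST image would shrink from 784 values to three:

```python
def describe(image, threshold=128):
    """Reduce a greyscale image (rows of 0-255 values) to a short description:
    how many pixels are 'ink' and the size of the box that encloses them."""
    ink = [(r, c) for r, row in enumerate(image)
           for c, value in enumerate(row) if value >= threshold]
    if not ink:
        return {"ink_pixels": 0, "box_height": 0, "box_width": 0}
    rows = [r for r, _ in ink]
    cols = [c for _, c in ink]
    return {
        "ink_pixels": len(ink),
        "box_height": max(rows) - min(rows) + 1,
        "box_width": max(cols) - min(cols) + 1,
    }

# A 6x6 image (36 values) containing a vertical stroke, like a crude "1".
image = [[0] * 6 for _ in range(6)]
for r in range(1, 5):
    image[r][3] = 255

print(describe(image))  # {'ink_pixels': 4, 'box_height': 4, 'box_width': 1}
```

Whether these particular three numbers are enough to identify anything useful depends on the task; choosing features that keep the right information is precisely the difficulty of description.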

The simpler a description is, the more cases fit into it and therefore the greater its capacity for generalisation, but the greater the likelihood that it will also cover cases it should not. The aim is therefore to find a description general enough to cover the right cases, yet restrictive enough to exclude the wrong ones.

For example, for the calculator images, a possible description general enough to fit all of them and restrictive enough to exclude the laptops would be to extract the number of edges visible in the image, the size of the screen and/or the number of buttons. We can then set thresholds and combinations of values for these characteristics that match the description of a calculator, such as four edges, a screen smaller than half the size of the object, and around 26 buttons.
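The thresholds in this example can be written as a hypothetical rule. The sketch assumes the three characteristics have already been extracted from the image (the hard part, which the article leaves for future posts) and uses an assumed tolerance of ±4 around the 26 buttons. Changing `button_tolerance` shows the trade-off between generality and restrictiveness described above; all feature values are invented for illustration:

```python
from dataclasses import dataclass

@dataclass
class Description:
    """Hypothetical features assumed to have been extracted from an image."""
    edges: int           # number of straight edges in the object's outline
    screen_ratio: float  # screen size divided by object size
    buttons: int         # number of buttons detected

def looks_like_calculator(d: Description, button_tolerance: int = 4) -> bool:
    """Match a description against the thresholds given in the text.
    A larger button_tolerance makes the rule more general (it accepts more
    cases) but also more likely to accept objects that are not calculators."""
    return (
        d.edges == 4
        and d.screen_ratio < 0.5
        and abs(d.buttons - 26) <= button_tolerance
    )

calculator = Description(edges=4, screen_ratio=0.2, buttons=24)
laptop = Description(edges=4, screen_ratio=0.5, buttons=80)
big_calculator = Description(edges=4, screen_ratio=0.25, buttons=35)

print(looks_like_calculator(calculator))      # True
print(looks_like_calculator(laptop))          # False
print(looks_like_calculator(big_calculator))  # False: 35 is outside 26 +/- 4
print(looks_like_calculator(big_calculator, button_tolerance=10))  # True
```

Widening the tolerance lets the larger calculator through, but each widening also makes it more likely that objects which are not calculators will match.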

In future blog posts we will talk in greater detail about the action of describing, how this task can be automated and how, together with Machine Learning, it serves as a basis for solving not only Computer Vision problems, but also problems in most fields of Artificial Intelligence.

Iván de Paz Centeno is a Data Scientist and R&D Engineer at Xeridia

[1] The MNIST dataset is a collection of 60,000 training images (plus 10,000 test images) of handwritten digits (0-9), in greyscale and 28×28 pixels in size. Reference: Y. LeCun, L. Bottou, Y. Bengio and P. Haffner. “Gradient-based learning applied to document recognition.” Proceedings of the IEEE, 86(11):2278-2324, November 1998.